Optimized Deep Convolutional Neural Network for Robust Occluded Facial Expression Recognition

DOI: https://doi.org/10.62019/dpfhnf43

Keywords: Histogram of Oriented Gradients, Facial Expression Recognition, Occluded Faces, Emotion Detection, CNN

Abstract

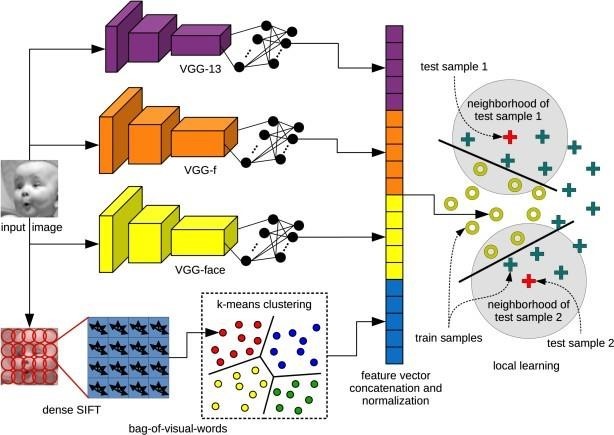

Occluded facial expression recognition (OFER) is a difficult problem in real-world applications, particularly human-computer interaction and affective computing. Despite recent advances, existing methods often lose accuracy when parts of the face are occluded. This study proposes a hybrid framework that combines handcrafted and deep learning-based features to improve the robustness and precision of emotion recognition. Specifically, we integrate Histogram of Oriented Gradients (HoG) features, facial landmark descriptors, and sliding window-based HoG representations with deep convolutional neural network (CNN) features, exploiting their complementary strengths. Our experiments explore several feature fusion strategies, including CNN-based automated classification and a hybrid model that combines Dlib-extracted landmarks with HoG-CNN integration. Comparison with state-of-the-art approaches shows that the multi-feature fusion technique significantly improves recognition accuracy, reaching 96% accuracy on benchmark datasets such as RAF-DB and AffectNet. However, we observe a slight decline in performance as dataset complexity increases, which highlights the need for scalable solutions. This research demonstrates the effectiveness of integrating handcrafted and deep learning-driven features and offers a promising direction for occlusion-robust facial expression recognition in dynamic environments.
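The abstract does not state the fusion rule, HoG cell size, window size, stride, or CNN backbone. Purely as an illustration of feature-level fusion, the sketch below assumes that each feature block is L2-normalized and then concatenated. It uses a simplified HoG (magnitude-weighted, unsigned-orientation histograms per cell with one global normalization, not the overlapping block normalization of full HoG), a sliding-window variant built on it, and a landmark descriptor computed from 68 (x, y) points such as those produced by Dlib's 68-point shape predictor. The CNN embedding is a random stand-in. All function names and sizes are hypothetical, and this is not the authors' implementation.

```python
import numpy as np

def hog_descriptor(img, cell=8, bins=9):
    """Simplified HoG (assumption): per-cell orientation histograms,
    weighted by gradient magnitude, with a single global L2 normalization."""
    img = img.astype(np.float64)
    gy, gx = np.gradient(img)  # derivative along rows (y) first, then columns (x)
    mag = np.hypot(gx, gy)
    ang = np.rad2deg(np.arctan2(gy, gx)) % 180.0  # unsigned orientation in [0, 180)
    bin_idx = np.minimum((ang / (180.0 / bins)).astype(int), bins - 1)
    ch, cw = img.shape[0] // cell, img.shape[1] // cell
    hist = np.zeros((ch, cw, bins))
    for i in range(ch):
        for j in range(cw):
            sl = (slice(i * cell, (i + 1) * cell), slice(j * cell, (j + 1) * cell))
            hist[i, j] = np.bincount(bin_idx[sl].ravel(),
                                     weights=mag[sl].ravel(), minlength=bins)
    v = hist.ravel()
    return v / (np.linalg.norm(v) + 1e-12)

def sliding_window_hog(img, win=32, stride=16, cell=8, bins=9):
    """HoG computed on overlapping windows and concatenated (hypothetical sizes)."""
    h, w = img.shape
    feats = [hog_descriptor(img[y:y + win, x:x + win], cell, bins)
             for y in range(0, h - win + 1, stride)
             for x in range(0, w - win + 1, stride)]
    return np.concatenate(feats)

def landmark_descriptor(pts):
    """(68, 2) landmarks -> translation- and scale-normalized flat vector."""
    pts = np.asarray(pts, dtype=np.float64)
    centered = pts - pts.mean(axis=0)  # remove face position
    scale = np.sqrt((centered ** 2).sum(axis=1).mean()) + 1e-12  # RMS radius
    return (centered / scale).ravel()

def fuse(*blocks):
    """Feature-level fusion: L2-normalize each block, then concatenate."""
    return np.concatenate([b / (np.linalg.norm(b) + 1e-12) for b in blocks])

rng = np.random.default_rng(0)
face = rng.random((48, 48))            # stand-in for a 48x48 grayscale face crop
landmarks = rng.random((68, 2)) * 48   # stand-in for 68 detected landmarks
cnn_emb = rng.standard_normal(128)     # stand-in CNN embedding (assumed size)

fused = fuse(hog_descriptor(face), sliding_window_hog(face),
             landmark_descriptor(landmarks), cnn_emb)
print(fused.shape)  # (1164,)
```

With these assumed sizes, the full-image HoG contributes 6 × 6 × 9 = 324 values, the four 32 × 32 windows contribute 4 × 144 = 576, the landmarks contribute 136, and the embedding contributes 128. Normalizing each block before concatenation keeps a long block such as the sliding-window HoG from dominating the fused vector purely because of its length. Any downstream classifier (for example, a dense head on top of the CNN) would consume `fused`.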



License

Copyright (c) 2025 Muhammad Nauman, Muhammad Usman Javeed, Muhammad Talha Jahangir, Shiza Aslam, Muhammad Khadim Hussain, Zeeshan Raza, Shafqat Maria Aslam

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.